Over the last few years we have been collaborating with local occupational therapist Annabelle Hippisley, using the EyeLink 1000 eye tracker to examine eye movement control in some of the children she sees in her practice.

Therapeutic approaches in occupational therapy are strongly influenced by the work of the American psychologist Jean Ayres, who suggested that the difficulties experienced by children with conditions such as autism stem from problems in integrating sensory information. Her work inspired a therapeutic approach that emphasises developing sensorimotor coordination through playful activities such as ball catching, swings and obstacle courses.

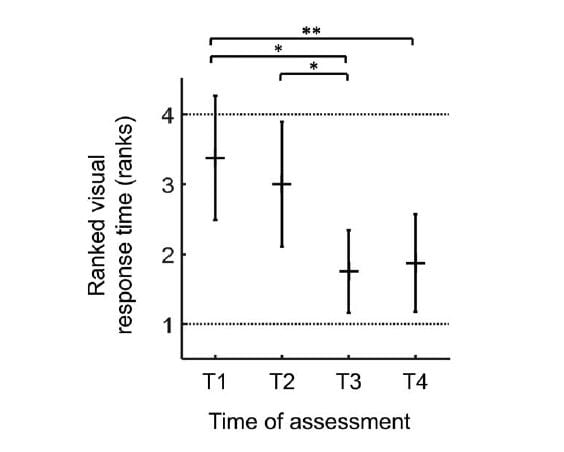

Despite their influence in practice, Ayres's ideas have received little attention from academic researchers. As a step towards addressing this, some of Annabelle's clients have been taking part in research using our EyeLink eye tracker. They play eye movement "games" that measure the speed and accuracy of different types of eye movement, including saccades (rapid eye movements used to shift gaze from one object to another), smooth pursuit (keeping the eyes fixed on a moving object) and sustained fixation (keeping the eyes stationary).

A paper reporting the detailed findings is currently under review. In the meantime, we would like to thank all the children and parents who took part. Watch this space for more details on the findings and their implications!